Centralized log management is important for a tech company of any size. Larger companies need not centralize every log in one place; logs can instead be segmented by department, product and so on.

Background in the context of ALight Technology And Services Limited

ALight Technology And Services Limited is both a product and a service based company. It currently offers two completely free products: SimplePass and PodDB. With SimplePass, I am not too worried, because apart from the code there is no data on the server and certainly no customer-specific data. With PodDB the risk is slightly higher because there is data, although none of it is customer-specific. As of now the AWS account and servers are tightly secured, with immediate alerts on logins to the AWS console or servers, new EC2 instances, instance terminations and so on. With the infrastructure and access to it secured, the next step is being able to detect and respond to external threats. These steps are very important before developing any product that might contain customer data. What if someone tries to hack by sending a malicious payload, or launches a DoS (Denial of Service) or DDoS (Distributed Denial of Service) attack? To identify, mitigate and prevent such things, it's very important to have proper log management, monitoring of metrics, proper alerts and a proper action plan / business continuity plan for when such incidents occur. Even if such an incident does happen, it's very important to have logs so that computer forensics can be performed.
No company is going to offer free products forever without generating revenue. Similarly, ALight Technology And Services Limited plans to develop revenue-generating products and to offer services such as architecting, development and hosting. Compared with the powerful modern hacking equipment of the anonymous group that calls itself the “eyes”, the current state of information security standards is far behind. (Don't confuse them with the intelligence alliance “Five Eyes”; as a matter of fact, the anonymous “eyes” are targeting the five countries that formed the “Five Eyes” and any whistleblowers like me. In this context, I am the whistleblower (but not R&AW) of India's R&AW equipment capabilities and of the atrocities committed against me by the R&AW spies.)

I have looked into 4 solutions, and each of them has strengths and weaknesses.

What I was looking for:

For example, PodDB has web server logs (NGINX), ASP.NET Core web application logs, and a bunch more logs from the microservice that interacts with the database, the microservice that writes some trending data, the microservices that query Solr and so on. So I have multiple log sources, and I want to aggregate all of them along with other logs such as syslog, the MariaDB audit log etc.
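To make the goal concrete, here is a minimal Python sketch of what aggregating several sources into one timeline means. It is not how any of the tools below work internally; the source names (`nginx`, `aspnet`) and the sample entries are made up for illustration, and each source is assumed to be already sorted by time.

```python
import heapq
from datetime import datetime
from typing import Iterable, Iterator, NamedTuple, Tuple


class LogEntry(NamedTuple):
    timestamp: datetime
    source: str
    message: str


def tag(source: str, lines: Iterable[Tuple[datetime, str]]) -> Iterator[LogEntry]:
    """Attach a source name to every (timestamp, message) pair."""
    for timestamp, message in lines:
        yield LogEntry(timestamp, source, message)


def aggregate(*streams: Iterable[LogEntry]) -> Iterator[LogEntry]:
    """Merge streams that are each sorted by time into a single timeline."""
    return heapq.merge(*streams, key=lambda entry: entry.timestamp)


# Hypothetical sample data standing in for real log files.
nginx = [
    (datetime(2023, 1, 1, 10, 0, 0), "GET /search 200"),
    (datetime(2023, 1, 1, 10, 0, 2), "GET /podcast/42 200"),
]
webapp = [
    (datetime(2023, 1, 1, 10, 0, 1), "Search request received"),
]

timeline = list(aggregate(tag("nginx", nginx), tag("aspnet", webapp)))
for entry in timeline:
    print(entry.timestamp.isoformat(), entry.source, entry.message)
```

Keeping the source name on every entry is what later makes it possible to view everything together or filter down to one component.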

1. AWS CloudWatch:

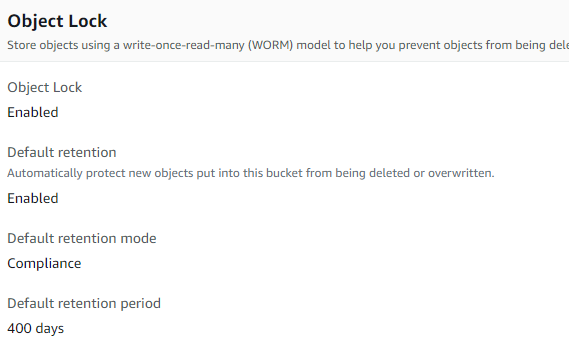

CloudWatch offers easy ingestion, very high availability, metrics, alarms and more, with 5GB of log ingestion per month for free. However, live tailing of logs, i.e. seeing logs as soon as they are ingested, is a bit problematic. Querying / viewing across log groups is also a bit problematic. Its strength is the definable retention period for each log group. Once ingested, logs cannot be modified, so it is definitely a great solution for storing logs for compliance reasons. AWS should consider introducing storage tiers with lifecycle transitions like those of S3: hot logs could be queried for a definable period, then transition to an archival tier where they are stored for some period and finally deleted.
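As a rough illustration of the per-log-group retention mentioned above, the sketch below applies a retention period to several log groups through the CloudWatch Logs `put_retention_policy` call. The log group names and the periods are hypothetical, and the client is passed in so that the functions themselves do not need AWS credentials.

```python
from typing import Dict

# Hypothetical log groups and retention periods in days (illustration only).
RETENTION_DAYS: Dict[str, int] = {
    "/poddb/nginx": 30,
    "/poddb/webapp": 90,
    "/poddb/mariadb-audit": 365,
}


def set_retention(logs_client, log_group: str, days: int) -> None:
    """Set how long CloudWatch keeps log events in a single log group."""
    logs_client.put_retention_policy(logGroupName=log_group, retentionInDays=days)


def apply_retention(logs_client, policy: Dict[str, int]) -> None:
    """Apply one retention period per log group."""
    for log_group, days in policy.items():
        set_retention(logs_client, log_group, days)
```

With boto3 installed and credentials configured, this would be called as `apply_retention(boto3.client("logs"), RETENTION_DAYS)`. CloudWatch only accepts certain retention values; 30, 90 and 365 days are among them.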

2. ELK Stack:

The ELK stack consists of Elasticsearch, Logstash and Kibana: Elasticsearch for full-text search, Logstash for log ingestion and Kibana for visualization. This review is about the self-hosted version. The ELK stack has plenty of features and is very easy to manage, provided the application and all of its components are properly configured. It has built-in support for logs, live tailing of logs, metrics and more. Management is easier with Elastic Agents, i.e. agents can be installed on multiple machines, and what data each agent ingests can be controlled from the web interface. However, the ELK stack seemed a bit heavy on computing resources, and for whatever reason Logstash crashed several times and took the system down with it, i.e. the EC2 instance just hung and couldn't even be restarted. The ELK stack supports hot and cold log storage, i.e. the past 15 to 30 days of logs can be kept in hot storage and older logs can be moved automatically into the cold tier, where they are not queried frequently but are kept for various reasons.
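The hot and cold tiers are configured in Elasticsearch through an index lifecycle management (ILM) policy. The sketch below only builds the JSON body of such a policy; it is not the configuration I actually ran. The daily rollover, the 30-day threshold and the helper name `build_ilm_policy` are assumptions for illustration.

```python
import json


def build_ilm_policy(hot_days: int) -> dict:
    """Build an ILM policy body that moves indices to the cold phase after hot_days."""
    return {
        "policy": {
            "phases": {
                # Assumption: roll over to a new index once a day while hot.
                "hot": {"actions": {"rollover": {"max_age": "1d"}}},
                # After hot_days, lower the recovery priority of the older index.
                "cold": {
                    "min_age": f"{hot_days}d",
                    "actions": {"set_priority": {"priority": 0}},
                },
            }
        }
    }


policy = build_ilm_policy(30)
print(json.dumps(policy, indent=2))
```

The printed body is what would be sent to `PUT _ilm/policy/<policy-name>`. A `delete` phase could be added if old logs should eventually be removed.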

3. Graylog:

This is about the self-hosted version of Graylog. Graylog focuses only on log management. It is very easy to set up and to ingest logs into, and querying logs is easy. There is no support for metrics. Graylog allows creating snapshots of older data, which can be stored elsewhere, then restored and searched when necessary.

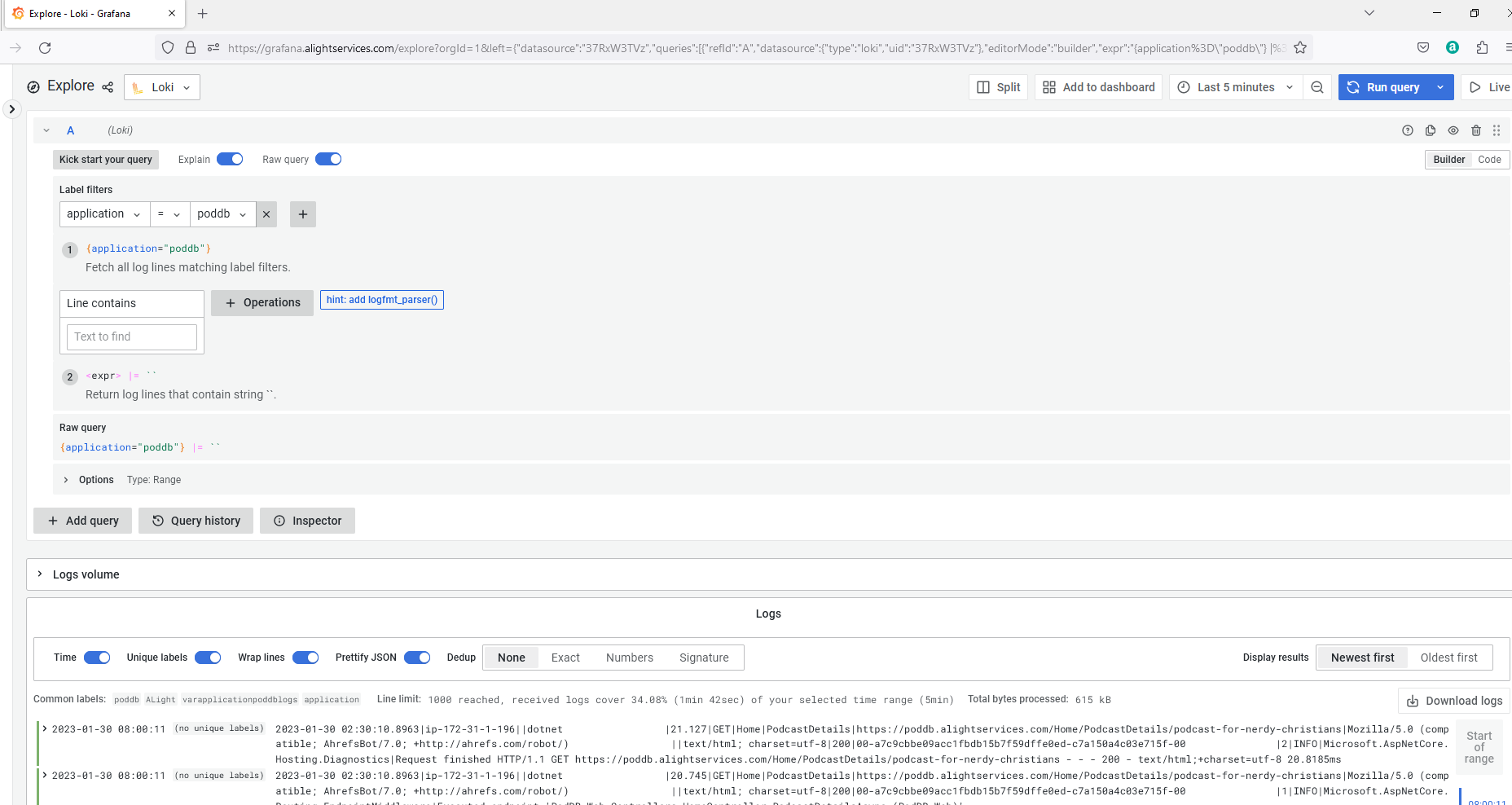

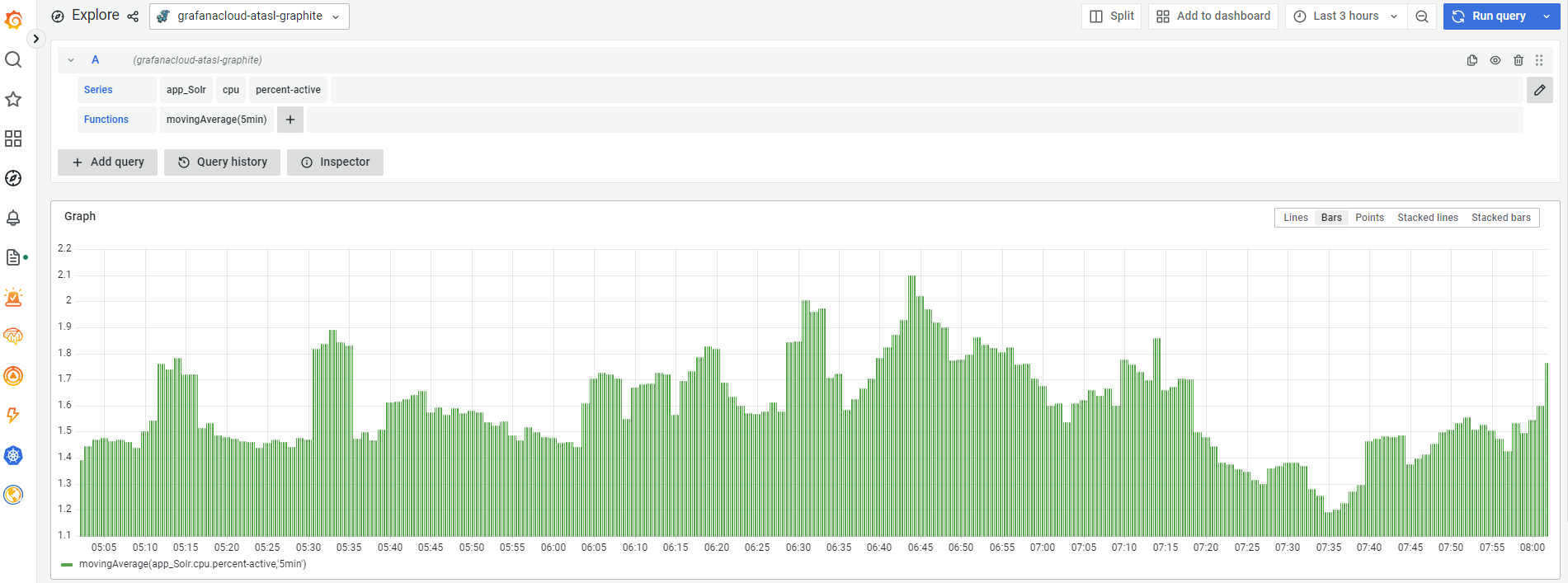

4. Grafana:

This is about the free Grafana account. Grafana offers a very generous 50GB of log ingestion per month. Logs can be easily ingested into Loki and viewed from Grafana. Metrics can be ingested into Graphite and viewed. Alerts are very easy to set up. I have not tried it yet, but the free tier also includes 50GB of trace ingestion per month. One of the features I like best about Grafana is the easy way of tagging logs. If log sources are properly tagged, combining and viewing multiple log sources is very easy.
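To show what tagging looks like in practice, here is a small sketch that builds the JSON body accepted by Loki's push endpoint (`/loki/api/v1/push`), with labels attached to each stream. The label names (`app`, `source`) and the log lines are made up, and the sketch stops short of sending anything, since the endpoint URL and credentials depend on the account.

```python
import json
import time
from typing import Dict, List, Optional


def loki_stream(labels: Dict[str, str], lines: List[str],
                now_ns: Optional[int] = None) -> dict:
    """Build one Loki stream: a label set plus [timestamp, line] pairs."""
    # Loki expects the timestamp as a string of nanoseconds since the epoch.
    timestamp = str(now_ns if now_ns is not None else time.time_ns())
    return {"stream": labels, "values": [[timestamp, line] for line in lines]}


def loki_payload(*streams: dict) -> str:
    """Wrap streams into the JSON body of a push request."""
    return json.dumps({"streams": list(streams)})


# Hypothetical labels: one shared "app" label, one "source" label per component.
body = loki_payload(
    loki_stream({"app": "poddb", "source": "nginx"}, ["GET /search 200"]),
    loki_stream({"app": "poddb", "source": "aspnet"}, ["Search request received"]),
)
print(body)
```

With labels like these, the LogQL selector `{app="poddb"}` would show both sources together, while `{app="poddb", source="nginx"}` narrows the view to the web server logs.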

Thank you Grafana for such a generous free tier and such a great product.

There seems to be no control over the retention period in the free tier; the paid Grafana subscription, which starts at $8 per month, does offer it. I plan to sign up for a paid account just before launching commercial products, specifically for planning retention: either Grafana stores older logs for a few extra months on my behalf, or it provides a way to upload them into S3 Glacier and, when needed, restore them from S3 Glacier and search them. Storing old logs in S3 Glacier with no way of restoring and searching them would defeat the entire purpose of keeping them.

–

Mr. Kanti Kalyan Arumilli

B.Tech, M.B.A

Founder & CEO, Lead Full-Stack .Net developer

ALight Technology And Services Limited

Phone / SMS / WhatsApp on the following 3 numbers:

+91-789-362-6688, +1-480-347-6849, +44-07718-273-964

kantikalyan@gmail.com, kantikalyan@outlook.com, admin@alightservices.com, kantikalyan.arumilli@alightservices.com, KArumilli2020@student.hult.edu, KantiKArumilli@outlook.com and 3 more rarely used email addresses – hardly once or twice a year.