Cross-Post: https://www.linkedin.com/pulse/introduction-vector-databases-having-llms-generate-arumilli-kg0tc

In previous posts, I covered what vector embeddings are, why they are needed, how to chunk text, some methods for chunking, and the importance of maintaining context. This post serves as an introduction to vector databases.

There are several databases that support storing and retrieving vector embeddings. Some dedicated vector databases include Chroma, Qdrant, Vespa, and LanceDB. Others, such as Solr, PostgreSQL, MongoDB Atlas (not the free community version), and Redis, also support vectors to varying degrees.

As with any other database, there are some important considerations when choosing a vector database:

1) Availability of a client SDK for your stack, or whether the database will be accessed through microservices

2) Sharding of data, replication for higher availability, and horizontal scaling

3) Ease of making backups of databases, creating snapshots, and restoring them

4) Types of vectors supported by the database

Some databases are easy to get started with but may not be suitable for production environments that require sharding, replication, and horizontal scaling.

Once the data has been chunked, stored, and indexed, the next steps are retrieving the appropriate documents from the database based on the query (embeddings need to be generated for the query as well) and passing them to an LLM.

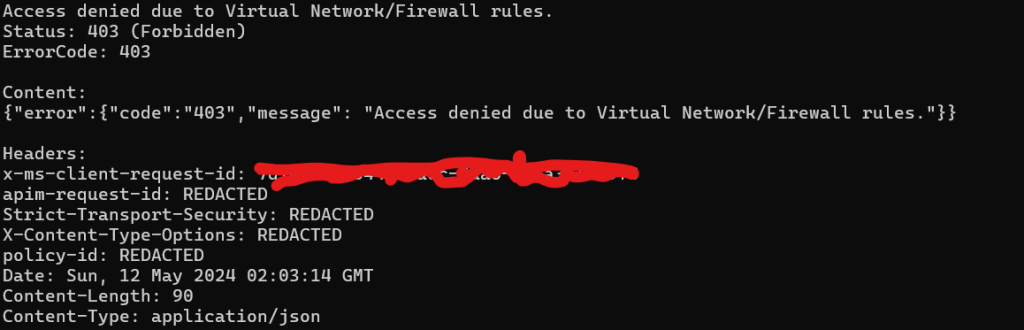
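To make the flow above concrete, here is a minimal sketch in Python. It is not how any particular vector database works internally. The `toy_embed` function is a stand-in (plain word counts) for a real embedding model, and `InMemoryVectorStore` and `build_prompt` are hypothetical names used only for illustration. The point is the sequence: embed the chunks, embed the query the same way, rank by similarity, and build the prompt for the LLM.

```python
import math
from collections import Counter


def toy_embed(text):
    """Stand-in for a real embedding model: a sparse vector of word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical store: keeps (chunk, embedding) pairs in a list."""

    def __init__(self):
        self.rows = []

    def add(self, chunk):
        # Embeddings are computed once, at indexing time.
        self.rows.append((chunk, toy_embed(chunk)))

    def search(self, query, top_k=2):
        # The query must be embedded with the same model as the chunks.
        query_vec = toy_embed(query)
        scored = [(cosine(query_vec, emb), chunk) for chunk, emb in self.rows]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]


def build_prompt(query, hits):
    """Combine the retrieved chunks and the question into one LLM prompt."""
    context = "\n".join(f"- {chunk}" for _, chunk in hits)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


store = InMemoryVectorStore()
for chunk in [
    "vector databases store embeddings and support similarity search",
    "chunking splits long text into smaller pieces",
    "caching responses reduces cost for repeated queries",
]:
    store.add(chunk)

query = "how do vector databases store embeddings"
hits = store.search(query, top_k=2)
prompt = build_prompt(query, hits)  # this string is what gets passed to the LLM
```

A real vector database replaces the linear scan in `search` with an index (often an approximate nearest neighbour index), which is what makes retrieval fast on large collections.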

But production scenarios need a few extra steps:

- Using tools such as LLM Guard to prevent malicious usage, e.g. a well-crafted query can be a prompt that asks the LLM to override its system message. This is known as prompt injection.

- Having LLMs respond in a manner where the output can be parsed

- Caching of responses

- In some scenarios, running output scanners on the response
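The extra steps listed above could be wired together roughly as in the sketch below. Everything here is an assumption made for illustration: the regex patterns are a deliberately naive stand-in for a real guard tool (this is not LLM Guard's API), `fake_llm` replaces a real LLM call, and the blocked terms and the in-memory cache are placeholders for whatever a production system would use.

```python
import json
import re

# Naive, hypothetical patterns; real guard tools use far more robust checks.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"(override|reveal|disregard) (the )?system (message|prompt)", re.IGNORECASE),
]

# Hypothetical terms that an output scanner should never let through.
BLOCKED_TERMS = ("api_key", "password")

_cache = {}           # normalised query -> parsed response
calls = {"llm": 0}    # counts how often the LLM is actually invoked


def looks_like_injection(query):
    """Input guard: flag queries that match a known injection pattern."""
    return any(p.search(query) for p in INJECTION_PATTERNS)


def fake_llm(prompt):
    """Stand-in for a real LLM call; returns JSON as the prompt requests."""
    return json.dumps({"answer": "Vector databases store embeddings.", "sources": ["doc-1"]})


def parse_response(raw):
    """Parse the LLM output and check that it has the expected shape."""
    data = json.loads(raw)
    if not isinstance(data, dict) or "answer" not in data:
        raise ValueError("LLM response is not in the expected format")
    return data


def output_is_safe(answer_text):
    """Output scanner: reject answers that contain blocked terms."""
    lowered = answer_text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def answer(query, llm=fake_llm):
    # 1) Guard the input before spending any tokens.
    if looks_like_injection(query):
        return {"answer": "Request rejected.", "sources": []}
    # 2) Serve repeated queries from the cache.
    key = " ".join(query.lower().split())
    if key in _cache:
        return _cache[key]
    # 3) Ask for machine-parseable output and parse it.
    prompt = (
        'Reply only with JSON of the form {"answer": str, "sources": [str]}.\n'
        f"Question: {query}"
    )
    raw = llm(prompt)
    calls["llm"] += 1
    result = parse_response(raw)
    # 4) Scan the output before returning it.
    if not output_is_safe(result["answer"]):
        result = {"answer": "Response withheld.", "sources": []}
    _cache[key] = result
    return result
```

Calling `answer("What is a vector database?")` twice should reach the LLM only once, because the second call is served from the cache. Caching on the normalised query text is the simplest option. A semantic cache keyed on query embeddings is another possibility, at the cost of more moving parts.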

WebVeta handles these steps and much more, including cost controls. WebVeta can be easily integrated into any website with just a few lines of HTML!

https://webveta.alightservices.com

WebVeta is running a Kickstarter campaign. Join the campaign to get a 25 to 40% discount and a price lock for at least 3 years.

https://www.kickstarter.com/projects/kantikalyanarumilli/webveta-power-your-website-with-ai-search

–

Mr. Kanti Kalyan Arumilli

B.Tech, M.B.A

Founder & CEO, Lead Full-Stack .Net developer

ALight Technology And Services Limited

Phone / SMS / WhatsApp on the following 3 numbers:

+91-789-362-6688, +1-480-347-6849, +44-07718-273-964

kantikalyan@gmail.com, kantikalyan@outlook.com, admin@alightservices.com, kantikalyan.arumilli@alightservices.com, KArumilli2020@student.hult.edu, KantiKArumilli@outlook.com and 3 more rarely used email addresses – hardly once or twice a year.