Have you ever wondered how machines truly understand the meaning of words? While they lack the nuanced comprehension we humans possess, they’ve learned to represent words as numerical vectors, opening a world of possibilities for tasks like search, translation, and even creative writing.

Cross Post – https://www.linkedin.com/pulse/introduction-vector-embeddings-kanti-kalyan-arumilli-wqg8c

Imagine each word in the English language as a point in a multi-dimensional space. Words with similar meanings cluster together, while unrelated words reside farther apart. This is the essence of vector embeddings: representing words as numerical vectors that capture the semantic relationships between them.

These vectors aren’t arbitrary; they’re learned through sophisticated machine learning algorithms trained on massive text datasets.
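To make “close together” concrete, here is a minimal Python sketch of cosine similarity, one common way to measure how close two embedding vectors are. The three-dimensional vectors below are hypothetical, hand-picked stand-ins for learned embeddings, which usually have hundreds or thousands of dimensions.

```python
import math

# Hypothetical 3-dimensional "embeddings", hand-picked for illustration only.
# Real models learn these values and use far more dimensions.
toy_embeddings = {
    "king": [0.90, 0.80, 0.10],
    "queen": [0.85, 0.75, 0.20],
    "apple": [0.10, 0.20, 0.90],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


king_queen = cosine_similarity(toy_embeddings["king"], toy_embeddings["queen"])
king_apple = cosine_similarity(toy_embeddings["king"], toy_embeddings["apple"])
print(f"king vs queen: {king_queen:.3f}")
print(f"king vs apple: {king_apple:.3f}")
```

With these toy values, “king” and “queen” score much higher than “king” and “apple”, which is the kind of clustering described above.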

Vector embeddings revolutionize how machines process language because:

Semantic Similarity: Words with similar meanings have vectors that are close together in the “semantic space.” This allows machines to identify synonyms, related terms and even subtle relationships between words.

Contextual Understanding: Contextual embedding models capture the nuanced meaning of a word based on its surrounding words, so “bank” in “river bank” and in “bank account” gets different vectors.

Improved Performance: Using embedding vectors as input to machine learning models often leads to significant performance gains in tasks like text classification, sentiment analysis, machine translation and neural search.
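For neural search, a common pattern is to embed the query and every document, then rank the documents by similarity to the query. The sketch below assumes the embeddings were already computed by some model; the document IDs and 3-d vectors are made up for illustration. It normalizes vectors to unit length so that a plain dot product equals cosine similarity.

```python
import math


def normalize(v):
    """Scale a vector to unit length so that a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def search(query_vector, documents, top_k=2):
    """Return the top_k (doc_id, score) pairs, most similar first."""
    q = normalize(query_vector)
    scored = []
    for doc_id, vec in documents.items():
        d = normalize(vec)
        scored.append((doc_id, sum(a * b for a, b in zip(q, d))))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical pre-computed document embeddings (toy 3-d vectors).
documents = {
    "doc-cooking": [0.1, 0.9, 0.2],
    "doc-football": [0.8, 0.1, 0.3],
    "doc-baking": [0.2, 0.8, 0.3],
}
query = [0.15, 0.85, 0.25]  # hypothetical embedding of a query such as "how to make bread"

for doc_id, score in search(query, documents):
    print(doc_id, round(score, 3))
```

In a real system the vectors come from an embedding model and a vector database or index replaces the linear scan, but the ranking idea is the same.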

There are various types of embeddings, such as Dense, Sparse and Late Interaction: dense models produce one fixed-length vector per text, sparse models produce vocabulary-sized vectors in which most values are zero, and late-interaction models keep one vector per token. Each type has several models, trained and fine-tuned on different datasets, and their computational costs and requirements differ significantly. Some models need a lot of CPU and memory yet underperform, while others need less CPU and memory and still perform well. As a rule of thumb, among models trained on the same datasets, those trained on a larger number of tokens usually outperform those trained on fewer tokens.
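The three types also differ in how a query is scored against a document. This is a simplified sketch with hypothetical toy inputs: dense vectors are compared with a dot product, sparse vectors (stored here as term-to-weight dictionaries) only contribute on shared terms, and late interaction uses ColBERT-style MaxSim scoring, where each query token vector is matched to its best document token vector and those maxima are summed. Real models produce the vectors; only the scoring step is shown.

```python
def dense_score(query_vec, doc_vec):
    """Dense: one fixed-length vector per text, compared with a dot product."""
    return sum(a * b for a, b in zip(query_vec, doc_vec))


def sparse_score(query_terms, doc_terms):
    """Sparse: {term: weight} dicts; only terms present in both contribute."""
    return sum(weight * doc_terms[term] for term, weight in query_terms.items() if term in doc_terms)


def late_interaction_score(query_tokens, doc_tokens):
    """Late interaction (MaxSim): best-matching document token per query token, summed."""
    return sum(max(dense_score(q, d) for d in doc_tokens) for q in query_tokens)


# Hypothetical toy inputs, not produced by any real model.
print(dense_score([0.2, 0.7, 0.1], [0.3, 0.6, 0.1]))
print(sparse_score({"vector": 1.2, "embedding": 0.8}, {"embedding": 0.5, "model": 0.9}))
print(late_interaction_score([[1.0, 0.0], [0.0, 1.0]], [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]))
```

Late interaction keeps more information per text, which helps explain why such models typically need more storage and compute than a single dense vector.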

The MTEB (Massive Text Embedding Benchmark) leaderboard on Hugging Face is a very useful reference: https://huggingface.co/spaces/mteb/leaderboard

The leaderboard lists many models along with their memory requirements, scores on various tasks, the size of the embeddings they generate and more. Most of these models are free to use under open-source licenses such as MIT, while some are commercial.
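Embedding size matters beyond benchmark scores, because every stored vector costs memory. As a rough back-of-the-envelope sketch, assuming float32 values (4 bytes each) and ignoring any index overhead, raw storage is simply vectors × dimensions × 4 bytes. The 1 million vectors and the 384 / 768 / 1024 dimensions below are example values, not figures taken from the leaderboard.

```python
def embedding_storage_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Raw storage for num_vectors embeddings; defaults to float32 (4 bytes per value)."""
    return num_vectors * dimensions * bytes_per_value


# Example values only: 1 million stored vectors at a few common dimensions.
for dims in (384, 768, 1024):
    size = embedding_storage_bytes(1_000_000, dims)
    print(f"{dims:>5} dims: {size / 1024**3:.2f} GiB")
```

Storage grows linearly with the dimension, so a model with smaller embeddings can be noticeably cheaper to run at scale if its scores are good enough for your task.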

I have previously written a blog post about converting Llama 2 / 3 into GGUF and getting embeddings from it using C#: https://www.alightservices.com/2024/04/27/how-to-get-text-embeddings-from-meta-llama-using-c-net/

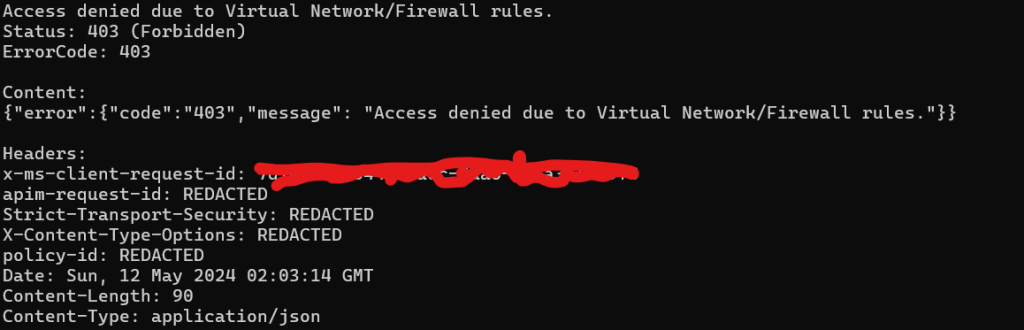

Most of the free models on the leaderboard have GGUF versions and can be used directly from C#, or through a free local HTTP server such as Ollama or llama.cpp, to get embeddings. Some models don’t have GGUF versions; some of those can probably be converted, while others might not be. Some models are available in ONNX format, and some might need Python code to generate embeddings. I have tried IronPython, but I don’t recommend IronPython or other third-party wrappers because they are less reliable. Here is a blog post about Python integration from .NET: https://www.alightservices.com/2024/04/09/c-net-python-and-nlp-natural-language-processing/
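For the local HTTP server route, here is a minimal sketch using only Python’s standard library against Ollama. It assumes Ollama is running on its default port (11434), that your version exposes the `/api/embed` endpoint (older versions use `/api/embeddings` with a `prompt` field and return a single `embedding`), and that an embedding model such as `nomic-embed-text` has been pulled; the helper function names are hypothetical. The same JSON request can be sent from C# with `HttpClient`.

```python
import json
import urllib.request

# Assumption: default Ollama address and the /api/embed endpoint of recent versions.
OLLAMA_URL = "http://localhost:11434/api/embed"


def build_embed_request(texts, model="nomic-embed-text", url=OLLAMA_URL):
    """Build a POST request whose JSON body lists the model and the texts to embed."""
    body = json.dumps({"model": model, "input": texts}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def parse_embed_response(raw_bytes):
    """Extract the list of vectors (one per input text) from the JSON response."""
    return json.loads(raw_bytes)["embeddings"]


def get_embeddings(texts, model="nomic-embed-text"):
    """Send the texts to the local server and return their embedding vectors."""
    request = build_embed_request(texts, model)
    with urllib.request.urlopen(request, timeout=60) as response:
        return parse_embed_response(response.read())
```

With the server running (for example after `ollama pull nomic-embed-text`), `get_embeddings(["What is a vector embedding?"])` should return a list containing one vector.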

–

Mr. Kanti Kalyan Arumilli

B.Tech, M.B.A

Founder & CEO, Lead Full-Stack .Net developer

ALight Technology And Services Limited

Phone / SMS / WhatsApp on the following 3 numbers:

+91-789-362-6688, +1-480-347-6849, +44-07718-273-964

kantikalyan@gmail.com, kantikalyan@outlook.com, admin@alightservices.com, kantikalyan.arumilli@alightservices.com, KArumilli2020@student.hult.edu, KantiKArumilli@outlook.com and 3 more rarely used email addresses (checked hardly once or twice a year).